Eneko's Digital Garden

Powered by 🌱Roam GardenImproving Deconvolution of fMRI Signal with Sparse Paradigm Free Mapping Using Stability Selection

Scope of this work

The main goal of this work is to estimate neuronal-related activity without any prior information about the timings of the BOLD events. To do this, several algorithms have been proposed in the literature and they all solve the problem by means of regularized least-squares estimators with - and -norms (Gitelman et al. (2003); Khalidov et al. (2011); Karahanoǧlu et al. (2013); Hernandez-Garcia and Ulfarsson (2011); Gaudes et al. (2011)).

However, there are no algorithms that select an optimal regularization parameter; which is key for accurate estimation. Thus, this work introduces two improvements over the previous Sparse Paradigm Free Mapping (SPFM) (Caballero Gaudes et al. (2013)) algorithm:

The addition of a subsampling approach called Stability Selection that avoids the selection of the regularization parameter;

A modified formulation that allows us to estimate innovation signals in addition to the activity-inducing signals.

Formulation

In the original SPFM formulation, for a given voxel, the fMRI signal is explained as the convolution of the haemodynamic responde function (HRF) and the activity-inducing signal . Gaussian noise is also considered. As we know the activity-inducing signal is sparse, we estimate it by means of regularized least-squares with an penalty.

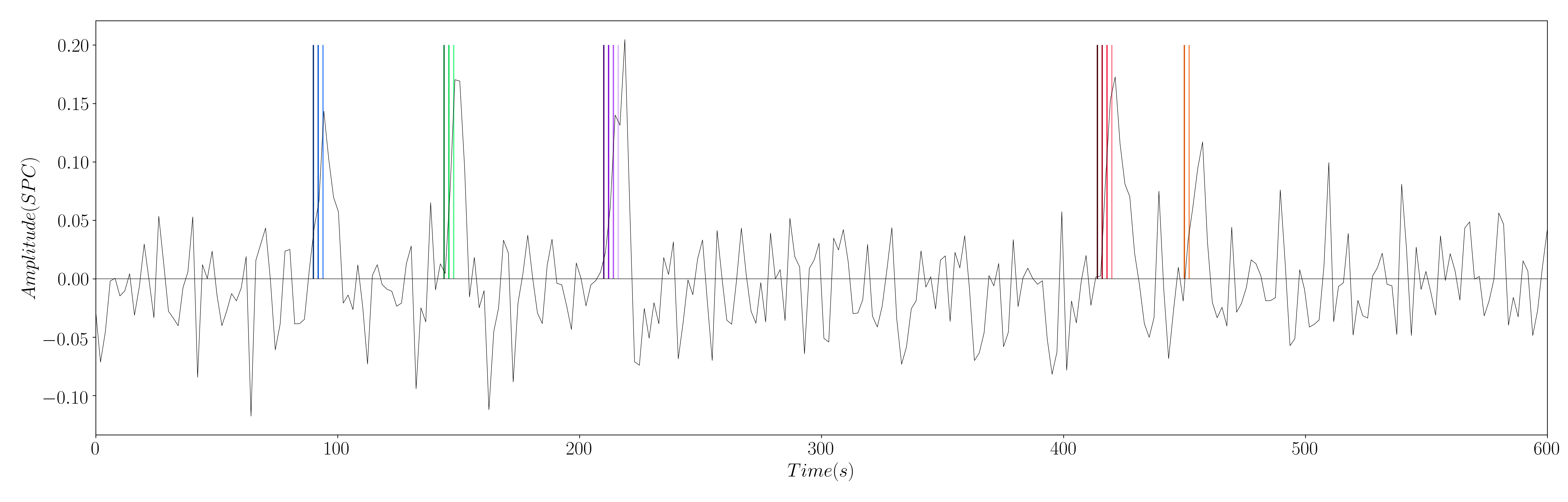

Figure 1: Simulated signal with activity-inducing signal of the 5 different simulated neuronal-related events.

Yet, this work introduces a modification to this formulation: the estimation of the innovation signal , which is the derivative of the activity-inducing signal; i.e. . In order to estimate the innovation signal, we add an integration operator into our design matrix , and we solve the same regularized least-squares problem (Cherkaoui et al. (2019)).

where

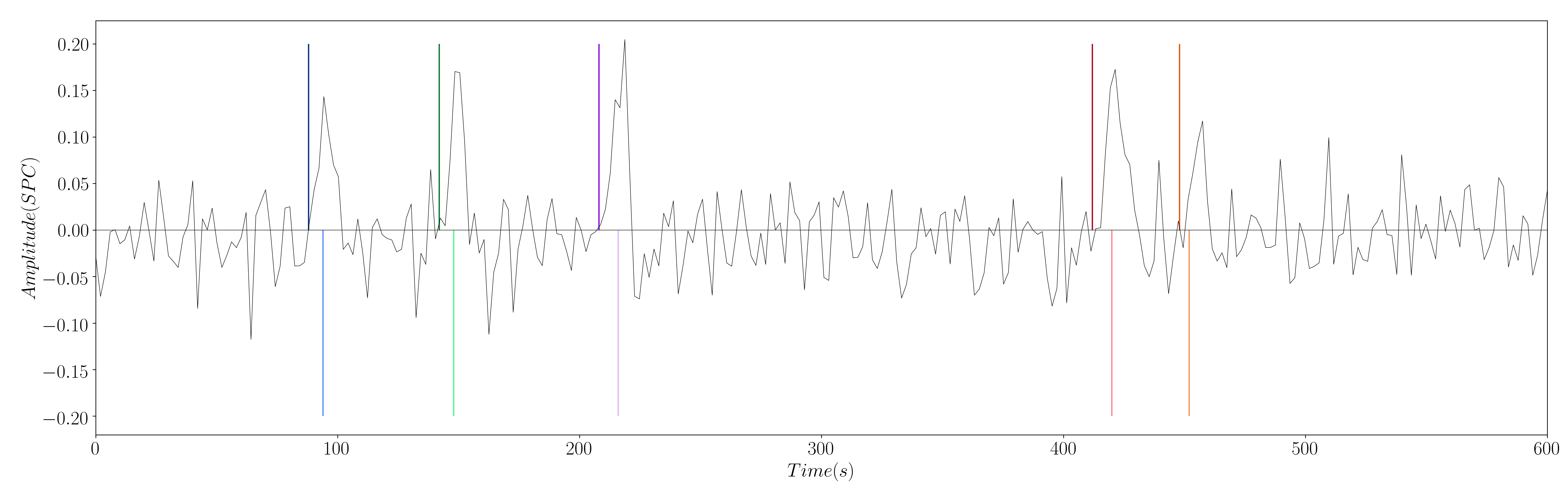

Figure 2: Simulated signal with innovation signal of the 5 different simulated neuronal-related events.

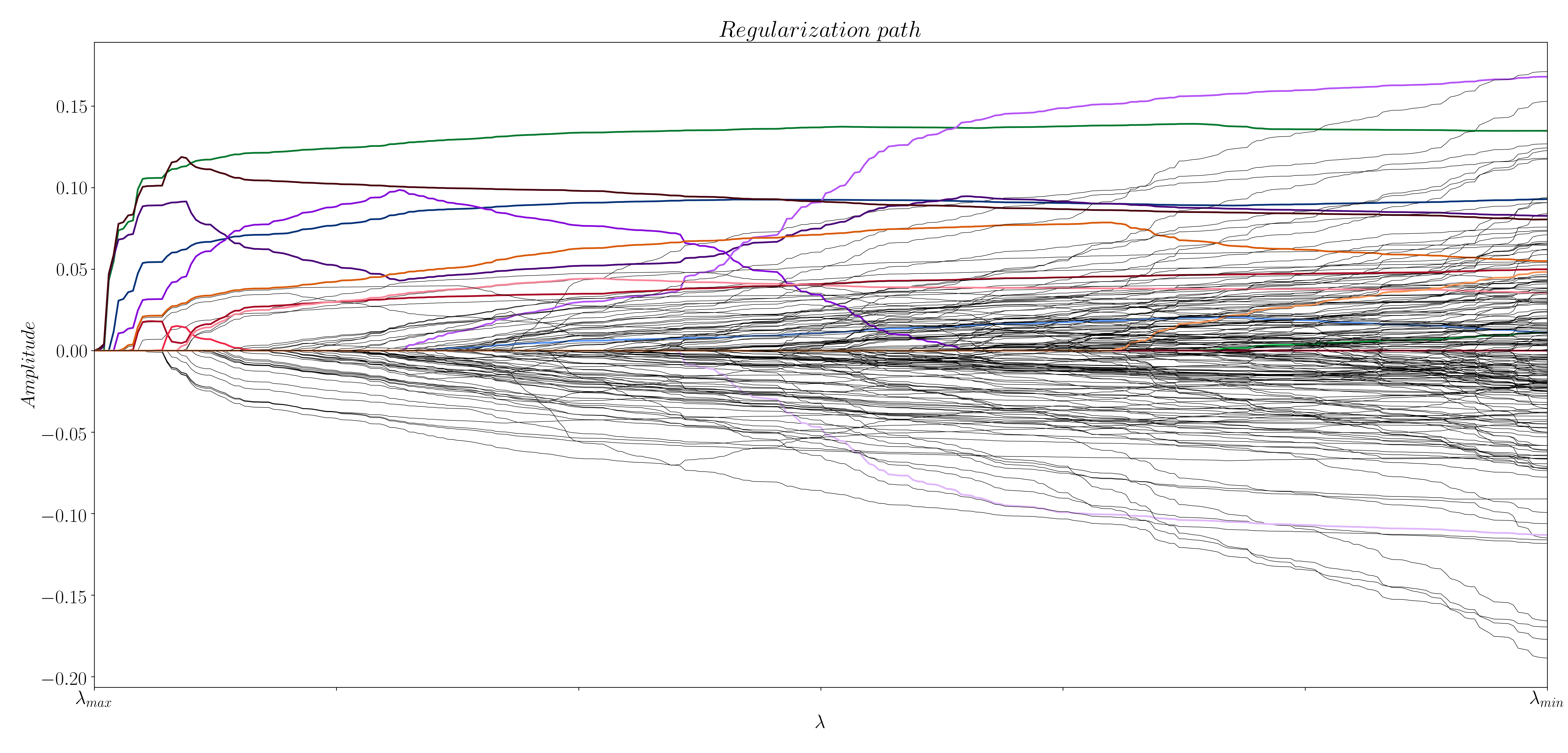

Regularization path

The selection of the regularization parameter is key for an optimal solution when solving optimization problems. This can be clearly seen when computing the regularization paths with the Least Angle Regression algorithm (Efron et al. (2004)) (see Figure 3 and 4), which can be done with the following lines of code:

import matplotlib.pyplot as plt

from sklearn.linear_model import lars_path as lars

# After importing data and hrf matrices

data_voxel = data[:, 0]

nscans = data_voxel.shape[0]

nlambdas = nscans + 1

# Compute regularization path

_, _, coef_path = lars(hrf, np.squeeze(data_voxel), method='lasso',

# Plot regularization path

plt.plot(coef_path.T)

plt.show()

Figure 3: Regularization path for activity-inducing signals.

Figure 4: Regularization path for innovation signals.

In the case of the activity-inducing signals, most of the events from the previous example appear at a high value of , while a few of them are buried in the lower values of . In the case of the innovation signals, it is not clear what the optimal is. So, what is the value of that yields the optimal solution? That's where stability selection (Meinshausen and Bühlmann (2009)) comes in.

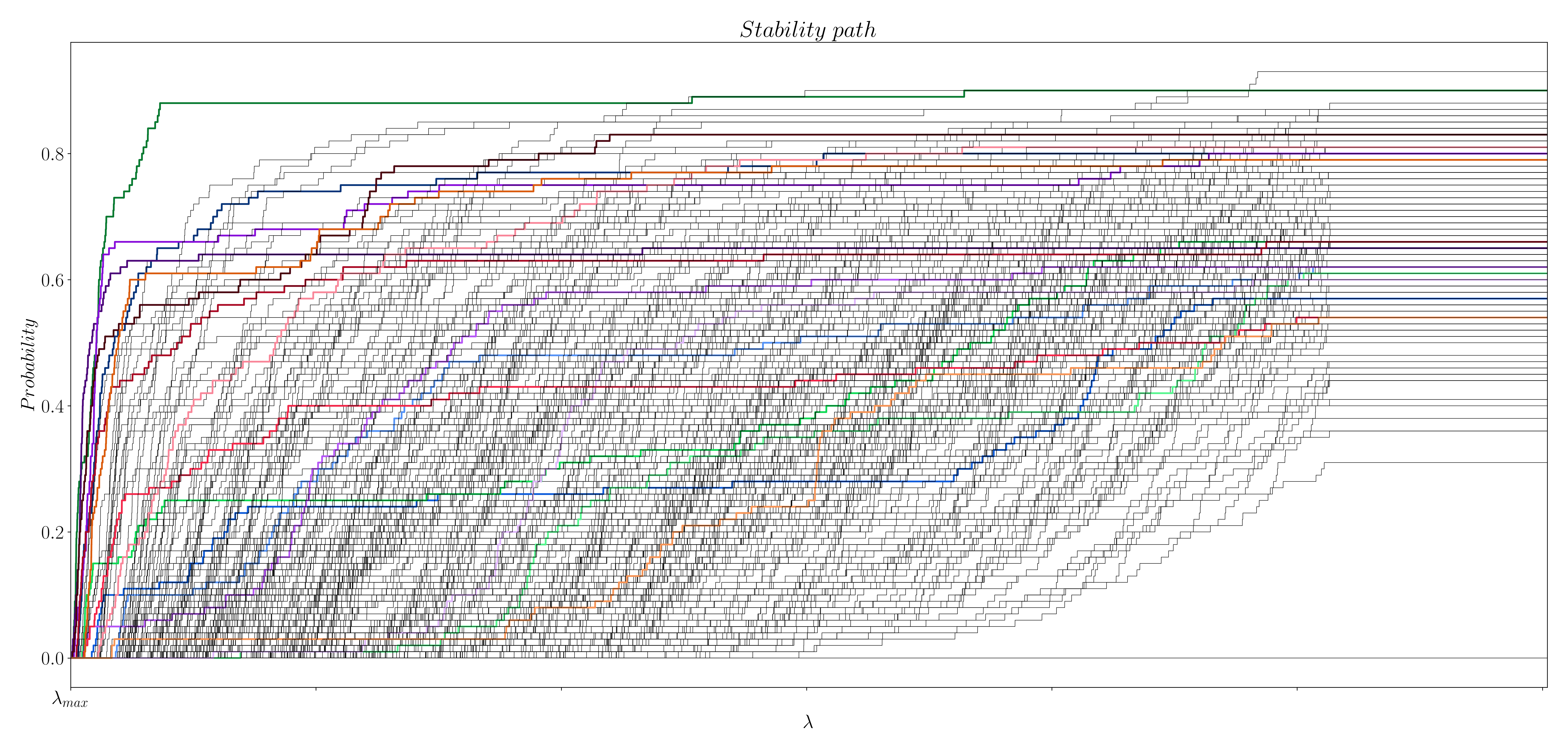

Stability Selection

Following the stability selection procedure (Meinshausen and Bühlmann (2009)), we generate 100 subsampled surrogate datasets that randomly keep 60% of the time-points of the original data and the design matrix . Then, we solve the optimization problem in (1) and compute the regularization path for each of the surrogates datasets using LARS. Once we have compute the regularization path of each surrogate dataset, we project the paths into a space with shared values of . This step is necessary given the different values of that LARS (Efron et al. (2004)) finds for each surrogate. Finally, we calculate the probability of each coefficient (i.e. time point) being non-zero for each value of . This last step lets us build the probability paths shown in Figure 5 and 6. The code to compute the stability selection with LARS can be found here.

Figure 5: Stability path for activity-inducing signals.

Figure 6: Stability path for innovation signals.

However, we still have to find/select the true coefficients from the stability paths. Unlike the original approach (Meinshausen and Bühlmann (2009)), which suggests to keep all coefficients above a given threshold, we suggest to calculate the area under the curve (AUC) of the paths of each time point as follows:

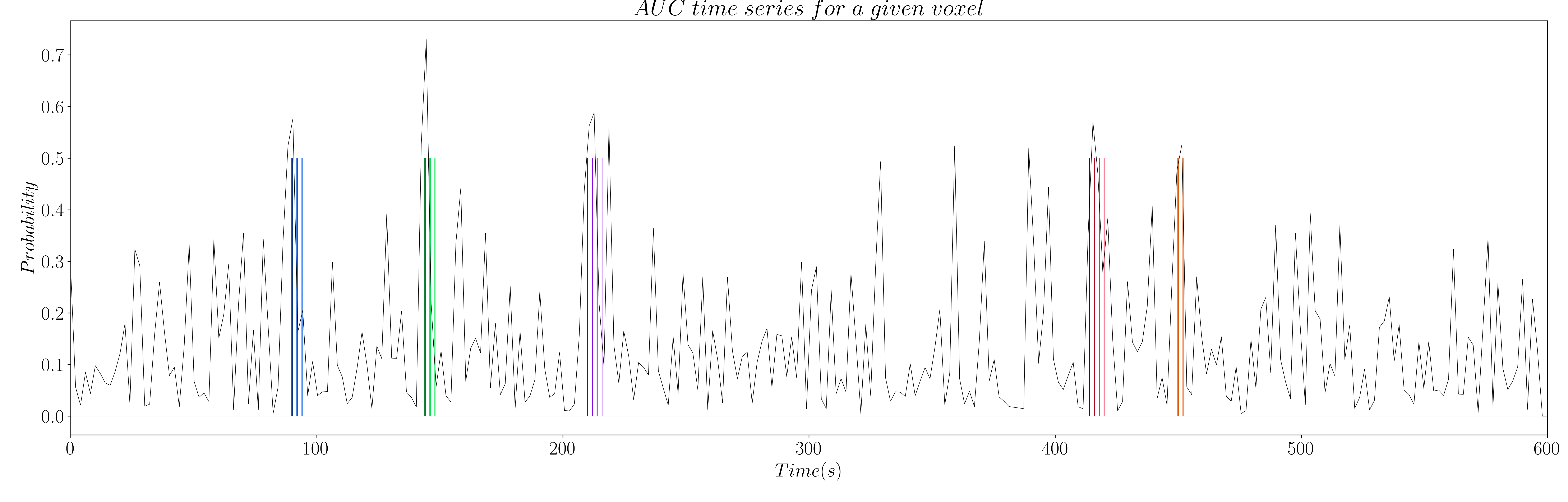

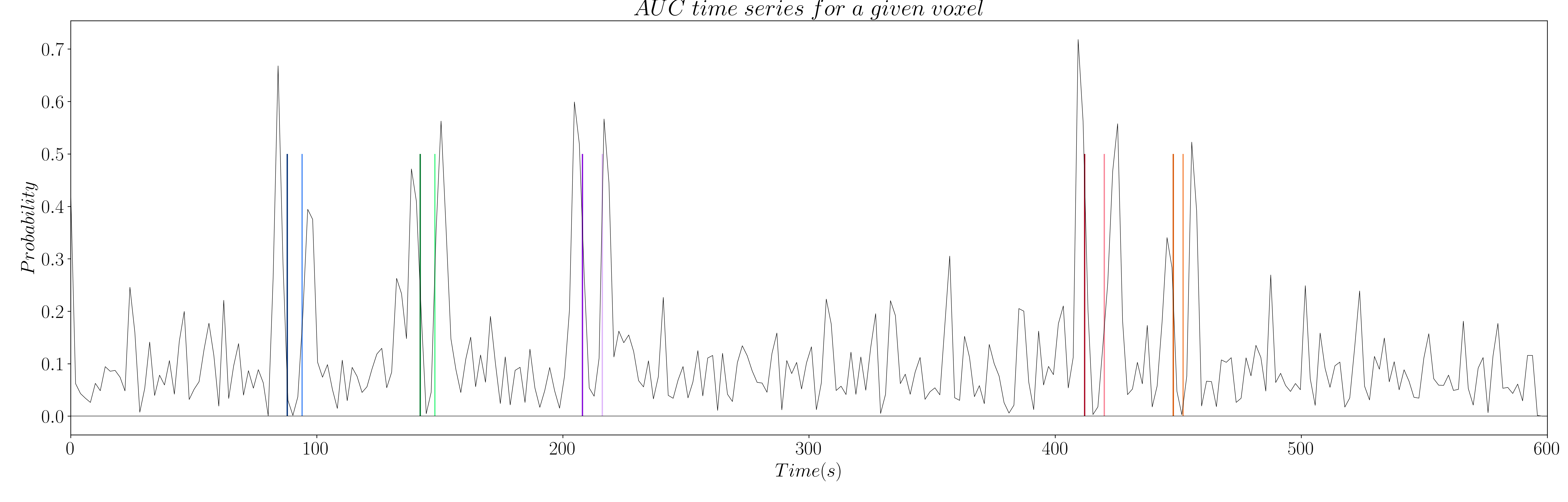

The computation of AUC yields a time series for each voxel that is related to the probability of a coefficient (i.e. time point) being non-zero (see Figure 7 and 8).

Figure 7: AUC time series for activity-inducing signals.

Figure 8: AUC time series for innovation signals.

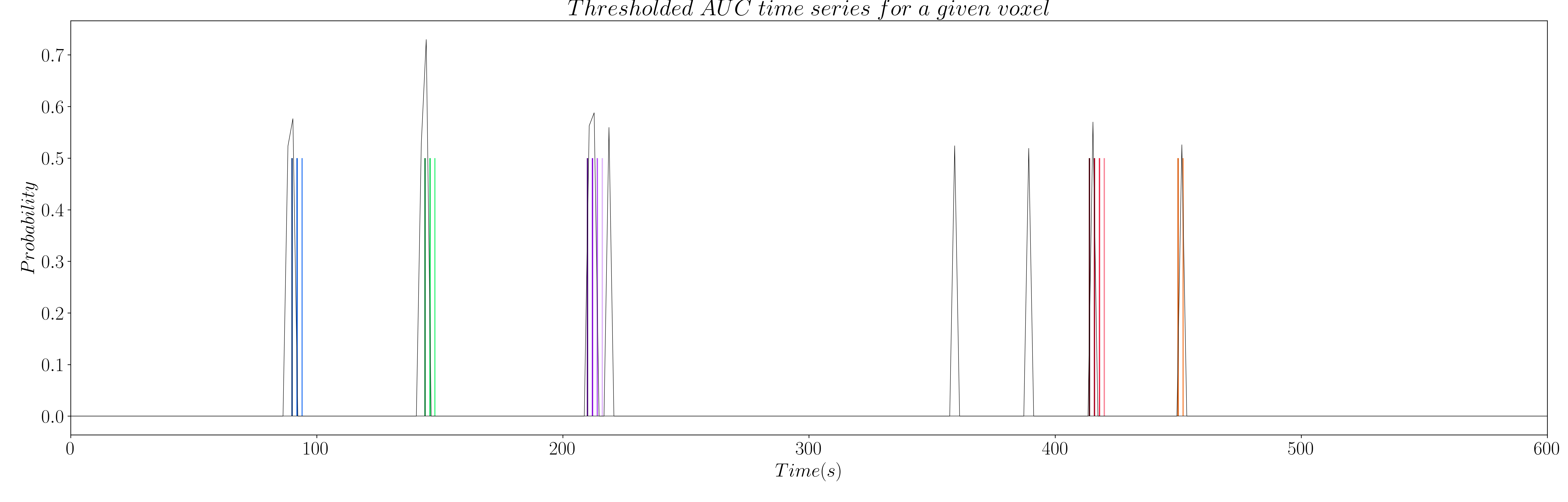

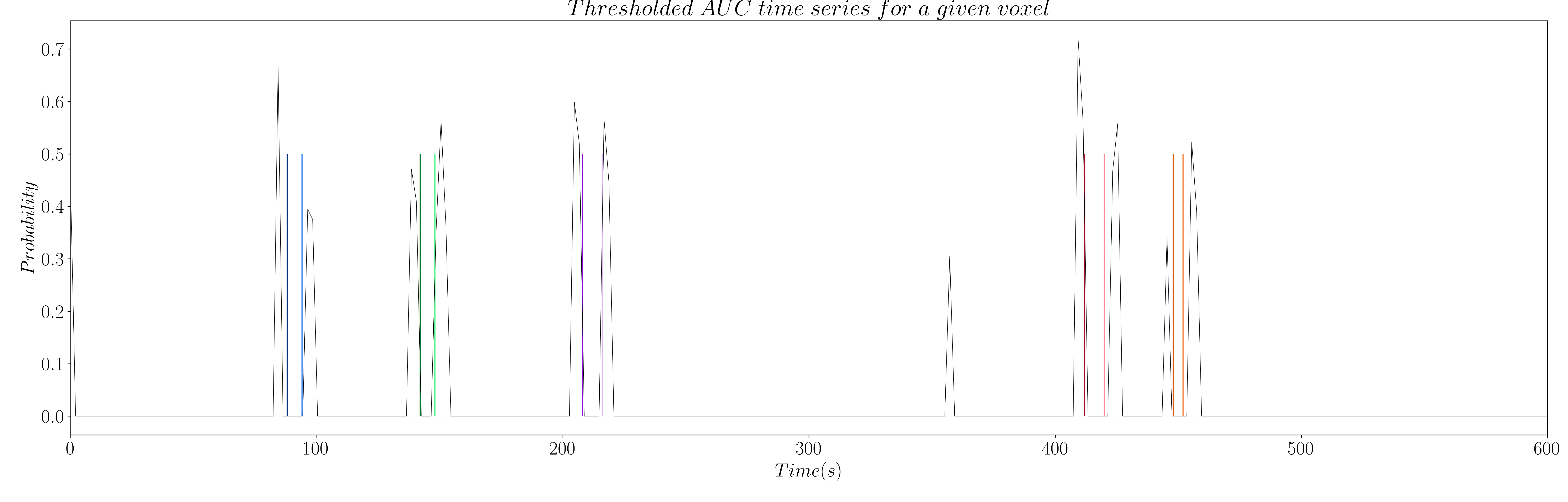

However, the AUC is non-zero by definition, which means we have to set a threshold to only keep the estimations of true neuronal-related events. Hence, we propose to threshold the AUC time series with the 99th percentile of the AUC time series of a region of non interest (see Figure 9 and 10); e.g. the deep white matter, as we do not expect top find any BOLD events in this region.

Figure 9: Thresholded AUC time series for activity-inducing signals.

Figure 10: Thresholded AUC time series for innovation signals.

Once we have thresholded the AUC, we perform a final debiasing step with the selected non-zero coefficients to get our estimates of the activity-inducing signal and the innovation signal as follows:

Rather, in the signal model with the innovation signal (2), the selected non-zero coefficients of are used to define a matrix whose columns are activation segments with piecewise constant unit between two non-zero coefficients of (Zoller et al. (2018)).

Benchmarking

We tested the two improvements with a finger tapping paradigm that contained trials of single and multiple taps, as shown in Figure 11.

Figure 11: Estimates of the neuronal-related events for a finger-tapping paradigm in a high SNR scenario. Each column shows a different moment in time of the finger tapping paradigm, with the three columns on the left belonging to single-tap events while the three columns on the right belong to multi-tap events.

Two fMRI datasets were acquired with a 7 Tesla scanner:

High SNR scenario: TR = 500 ms, voxelsize = 3x3x3 mm3;

Low SNR scenario: TR = 2800 ms, voxel size = 1.2x1.2x1.2 mm3.

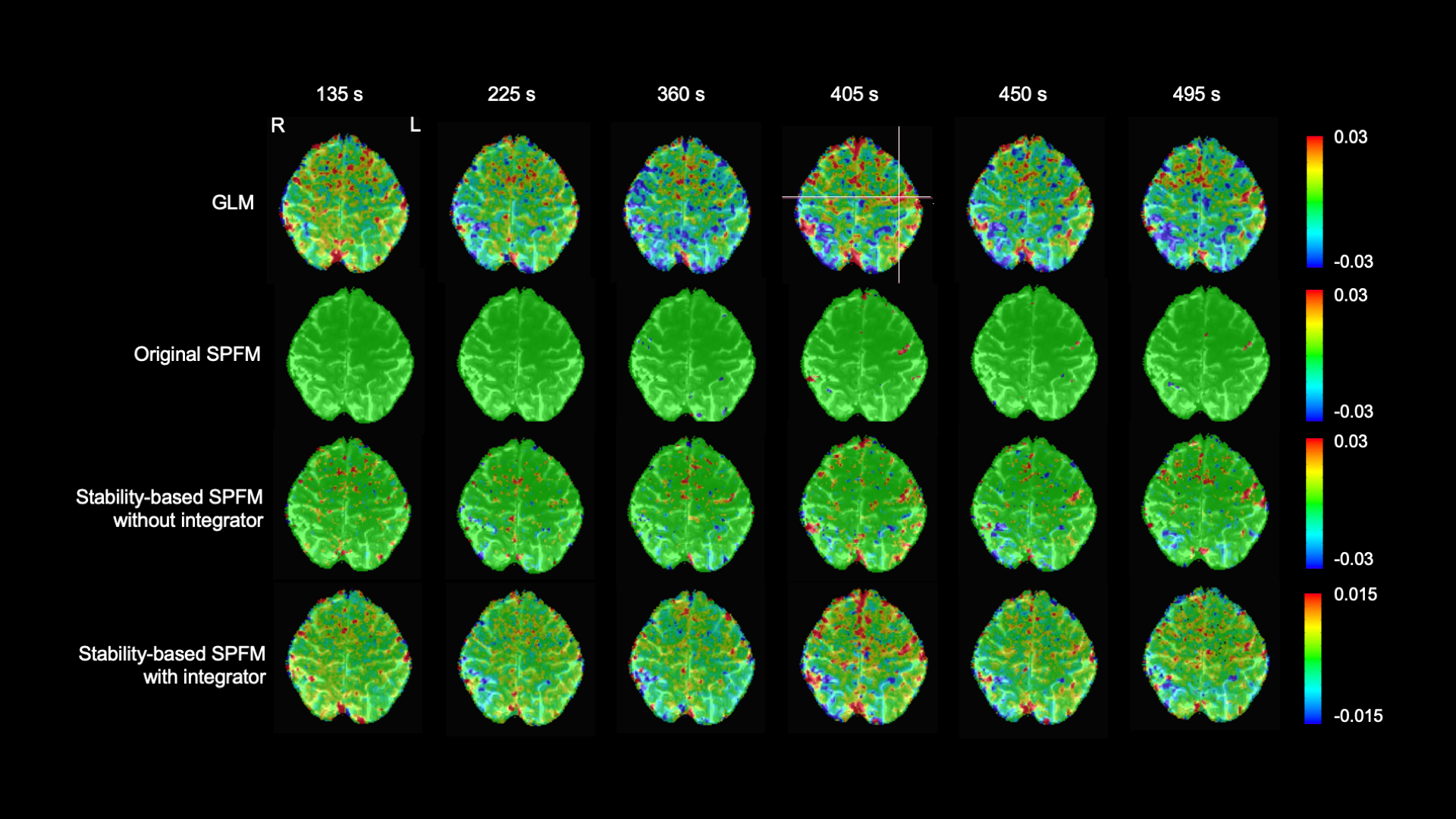

We compared the 4D maps produced with the proposed stability-based SPFM approach with the maps obtained with the original SPFM and a conventional single trial GLM analysis. Video 1 shows a segment of the AUC time series for one of the two datasets.

Video 1: segment of the AUC time series for one of the finger tapping datasets.

In the high SNR scenario, we can see that all three SPFM approaches are able to correctly estimate the different finger tapping eventsM. ore interestingly, the addition of the integration operator that allows us to estimate the innovation signal yields maps that are close to the gold standard of the GLM analysis. However, the amplitude of the estimations of the innovation signals is considerably lower.

Figure 12: Estimates of the neuronal-related events for a finger-tapping paradigm in a high SNR scenario. Each column shows a different moment in time of the finger tapping paradigm, with the three columns on the left belonging to single-tap events while the three columns on the right belong to multi-tap events.

Yet, it is in the low SNR scenario where we can see that the stability selection approach offers more robust estimates. The original SPFM struggles with the single tap events, while the stability-based SPFM approaches yield maps that are similar to the GLM analysis, specially when we add the integration operator to the formulation.

Figure 13: Estimates of the neuronal-related events for a finger-tapping paradigm in a low SNR scenario.

Each column shows a different moment in time of the finger tapping paradigm, with the three columns on the left belonging to single-tap events while the three columns on the right belong to multi-tap events.

Take-home messages

The combination of SPFM with stability selection offers more robust estimates of neuronal-related activity, especially at low SNR.

The addition of an integration operator allows to estimate innovation signals, yielding activity maps that are closer to the gold standard obtained with GLM trial-level analysis. Yet, the amplitude of the events might be underestimated, whereas their duration is overestimated.

Improvements due to the integration operator highly depend on the paradigm; e.g. tasks with block events would benefit more than short event-related trials.